A picture of a scraper being misdirected by Kudurru.

Kurt Paulsen/Kudurru

Artists have fought on several fronts against AI companies that they say are stealing their work to train AI models, including filing class action lawsuits and speaking out at government hearings.

Now, visual artists are taking a more direct approach: they’re starting to use tools that contaminate and confuse AI systems themselves.

One such tool, Nightshade, will not help artists combat existing AI models that have already been trained on their creative works. But Ben Zhao, who leads the University of Chicago research team behind the soon-to-be-launched digital tool, says it promises to disrupt future AI models.

“You can think of Nightshade as adding a little poison pill inside an artwork, literally trying to confuse the learning model about what’s in the image,” says Zhao.

How Nightshade works

Artificial intelligence models such as DALL-E or Stable Diffusion usually identify images through the words used to describe them in the metadata. For example, a picture of a dog is paired with the word “dog.” Zhao says Nightshade confuses this pairing by creating a mismatch between image and text.

“So, for example, it takes an image of a dog, changes it in subtle ways so that it still looks like a dog to you and me, except to the AI, it now looks like a cat,” Zhao says.

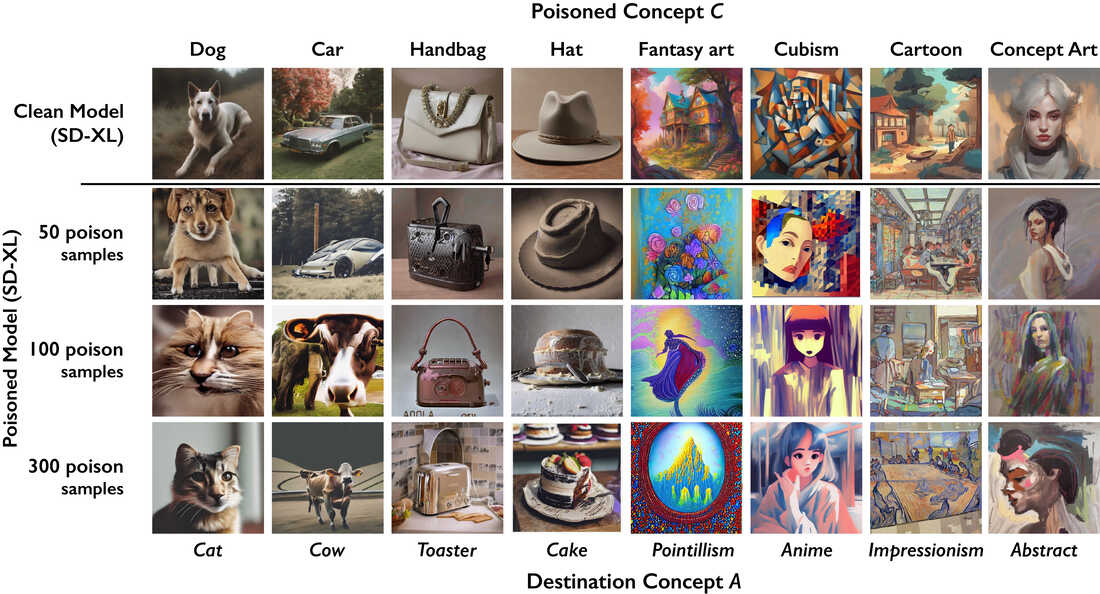

Examples of images generated by Nightshade-poisoned AI models and by a clean AI model.

The Glaze and Nightshade team at the University of Chicago

Zhao says he hopes Nightshade can infect future AI models to the point where AI companies are forced to either revert to older versions of their platforms or stop using artists’ work to create new versions.

“I want to create a world where AI has limits, AI has safeguards, AI has ethical boundaries enforced by tools,” he says.

Emerging weapons in an artist’s AI-disrupting arsenal

Nightshade isn’t the only fledgling weapon in an artist’s AI-disrupting arsenal.

Zhao’s team also recently launched Glaze, a tool that subtly alters the pixels of an artwork to make it difficult for an AI model to imitate a particular artist’s style.

“Glaze is just the first step in bringing people together to build tools to help artists,” says fashion photographer Jingna Zhang, founder of Cara, a new online community focused on promoting art created by humans (as opposed to AI). “From what I’ve seen while experimenting with my own work, when an image is trained on my style, it interrupts the final output.” Zhang says plans are underway to add Glaze and Nightshade to Cara.

And then there’s Kudurru, created by the for-profit company Spawning.ai. The resource, now in beta, tracks scrapers’ IP addresses and either blocks them or sends back unwanted content, such as an extended middle finger or the classic Internet trolling prank, the “Rickroll,” which spams unsuspecting users with the music video for British singer Rick Astley’s 1980s pop hit, “Never Gonna Give You Up.”

“We want artists to be able to interact differently with bots and scrapers that are used for AI purposes, rather than giving them all the information they would like to provide to their fans,” says Spawning co-founder Jordan Meyer.

Artists are excited

Artist Kelly McKernan says they can’t wait to get their hands on these tools.

“I’m just saying, let’s go!” says the Nashville-based painter, illustrator and single mother. “Let’s poison the dataset! Let’s do it!”

Artist Kelly McKernan in their studio in Nashville, Tenn., in 2023.

Nick Pettit

McKernan says they’ve been waging a war against AI since last year, when they discovered that their name was being used as an AI prompt, and then that more than 50 of their drawings had been scraped for AI models from LAION-5B, a massive image dataset.

Earlier this year, McKernan joined a lawsuit alleging that Stability AI and other companies used billions of online images to train their systems without compensation or consent. The case is ongoing.

“I’m right in the middle of it, along with a lot of artists,” McKernan says.

Meanwhile, McKernan says the new digital tools help them feel like they’re doing something aggressive and immediate to protect their work in a world of slow-moving lawsuits and even slower-moving regulation.

McKernan adds that they are disappointed, but not surprised, that President Joe Biden’s recently signed executive order on artificial intelligence does not address AI’s impact on the creative industries.

“So, right now, it’s kind of like, well, my house keeps getting broken into, so I’m going to protect myself with something like a mace and an axe!” they say of the defensive opportunities the new tools provide.

Debates about the effectiveness of these tools

While artists are excited to use these tools, some AI security experts and members of the development community are concerned about their effectiveness, especially in the long term.

“These kinds of defenses seem to work against a lot of things right now,” says Gautam Kamath, who researches data privacy and AI model robustness at Canada’s University of Waterloo. “But there’s no guarantee they’ll still be effective a year from now, ten years from now. Heck, even a week from now, we don’t know for sure.”

Social media platforms have also recently lit up with heated debates about how effective these tools really are. The conversations sometimes involve the tools’ creators.

Spawning’s Meyer says his company is committed to making Kudurru robust.

“There are unknown attack vectors for Kudurru,” he says. “If people start finding ways around it, we’ll have to adapt.”

“It’s not about writing a fun little tool that can exist in an isolated world, where some people care, some don’t, and the consequences are small and we can move on,” says the University of Chicago’s Zhao. “It involves real people, their livelihoods, and that’s what really matters. So, yes, we’re going to keep going as long as it takes.”

An AI developer weighs in

The biggest AI industry players, Google, Meta, OpenAI and Stability AI, either did not respond to or declined NPR’s requests for comment.

But Yacine Jernite, who leads the machine learning and community team at AI developer platform Hugging Face, says that even if these tools work really well, it’s not a bad thing.

“We see them as a very positive development,” Jernite says.

Jernite says data should be widely available for research and development, but AI companies must also respect artists’ wishes to opt out of having their work scraped.

“Any tool that allows artists to express their consent fits our approach of trying to get more perspectives on what makes up a training dataset,” he says.

Jernite says several artists whose work was used to train AI models shared on the Hugging Face platform have spoken out against the practice, and in some cases asked for the models to be removed. Developers do not have to comply.

“But we’ve found that developers tend to respect the artists’ wishes and remove those models,” Jernite says.

However, many artists, including McKernan, do not trust AI companies’ opt-out programs. “Not everyone offers them,” the artist says. And those that do often don’t make the process easy.

Audio and digital stories edited by Megan Collins Sullivan. Audio produced by Isabella Gomez-Sarmiento.

Image source: www.npr.org